Figure 10: Environmental mapping (a) simple texture mapping (b) sphere mapping

Another way of accurately computing the environmental map is to use standard projections [Ang03]. In the simplest case of an AR indoor environment, at least six cameras are required to estimate six projections [Vin95]. Each projection corresponds to one surface of the indoor environment (e.g. a kiosk environment inside a museum). Using all six projections, a single environmental map can be generated. This can then be easily applied to the model as a single texture.
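The six-projection idea can be sketched as a lookup that decides which of the six images a reflection direction falls on. This is a minimal illustration, assuming the six projections are arranged as the faces of an axis-aligned cube around the artefact; the function name `cube_face` and the face labels are hypothetical and do not come from the original system. The coordinates within each face depend on the chosen image convention and are left out.

```python
def cube_face(direction):
    """Return the label of the cube face ('+x', '-x', ...) hit by a direction vector.

    The face is selected by the component with the largest magnitude.
    """
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax == 0 and ay == 0 and az == 0:
        raise ValueError("direction must be non-zero")
    if ax >= ay and ax >= az:
        return "+x" if x > 0 else "-x"
    if ay >= az:
        return "+y" if y > 0 else "-y"
    return "+z" if z > 0 else "-z"


# A reflection vector pointing mostly downwards samples the floor projection.
print(cube_face((0.2, -0.9, 0.1)))
```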

5.2. Planar Reflections

To realistically model reflections in AR heritage environments, many issues must be taken into account. For example, in reality the light is scattered uniformly in all directions depending on the material.

FOTIS LIAROKAPIS

Realistic Rendering of Augmented Reality Heritage Exhibitions

Realistic Rendering of Augmented Reality Heritage Exhibitions/F.Liarokapis. CyberEmpathy: Visual Communication and New Media in Art, Science, Humanities, Design and Technology. ISSUE 8 /2014. Augmented Reality Studies. ISSN 2299-906X. Kokazone.

Mode of access: Internet via World Wide Web.

Source: www.fotisliarokapis.blogspot.com

Abstract:

The preservation of cultural heritage is a key concern for museums and other cultural heritage institutions. Another important issue is the limited space available to exhibit their collections to their visitors. Although a number of experimental systems based on advanced information and communication technologies such as Web3D, virtual reality and augmented reality have been previously developed they have not really managed to become popular mainly because they were used for passive viewing and limited interaction. This paper presents how realistic augmented reality kiosk exhibitions of museum collections, including galleries and artefacts, can be developed so that they can attract the visitor’s attention. Advanced computer graphics rendering algorithms such as interactive lighting and shading, fake, soft and hard shadows as well as reflections are combined with a high-level augmented reality tangible interface presented in real-time performance.


1. Introduction

The majority of heritage institutions, such as museums and art galleries, have realised that Information and Communication Technologies (ICT) have the potential, if used properly, to attract a large number of visitors and promote their exhibits in a better way. Each institution makes different use of ICT depending on its needs, resulting in a variety of presentation and interaction styles. For example, most museums and art galleries have invested in touch-screen displays with simple representations as the main presentation medium, without investigating the capabilities of more advanced ICT such as augmented reality (AR). Also, a number of pilot projects have been developed by universities but their results were largely disappointing [Arn00]. Part of the problem lies in the isolation or lack of communication between heritage institutions and universities, as well as in the diversity of the technologies used. Open standards such as XML, VRML and Web3D, as well as computer graphics techniques, seem to have clear benefits in assisting archaeologists in their professional work [Arn00], [WM*04] and in attracting the visitor’s attention. In particular, 3D archaeological reconstruction techniques have demonstrated impressive results focusing on fine detail and a high level of realism. Based on these accurate and realistic 3D representations, a number of virtual museum exhibitions have been developed [SL*05]. The concept of AR exhibitions has been around for a few years now and researchers have designed a number of prototypes [BA*99], [Gat00], [HC*01], allowing for more advanced representations (e.g. table-top AR exhibitions) and interaction (e.g. natural techniques). An overview of the most significant work in virtual and augmented museum exhibitions has been previously documented [SL*05].
In addition, Huang et al. (2005) developed a tangible photorealistic virtual museum system that allows visitors to interact naturally and have an immersive experience with museum exhibits [HCC05]. The ARCO project has developed realistic virtual and augmented presentations of museum galleries and their exhibits through the use of tangible AR interfaces [WM*04], [LS*04]. Another interesting approach is the Virtual Showcase, which is a projection-based AR display system [BF*01]. The Virtual Showcase contains real scientific and cultural artefacts and allows their 3D graphical augmentation. The virtual part of the showcase can react in various ways to a visitor, which provides the possibility for intuitive interaction with the displayed content. However, all of the above prototypes suffer from a lack of advanced realistic rendering techniques. Realism was achieved only through 3D reconstruction techniques, resulting in AR exhibitions that give a distant feeling to the visitors. The aim of this work is to provide a list of advanced rendering techniques and illustrate how to develop realistic AR kiosk exhibitions of museum collections, including galleries and artefacts, in order to attract the visitor’s attention. Based on previous prototypes [LS*04], [Lia07], a number of realistic techniques such as the generation of interactive lighting, shading, shadows and reflections have been integrated into a single AR interface. The remainder of this paper is organised as follows. Section 2 presents the most important requirements for the creation of indoor AR kiosk exhibitions. Section 3 shows how to design realistic augmentations using interactive lighting, shading and transparency. Section 4 presents a methodology for generating fake, hard and soft shadows, whereas section 5 illustrates how to generate environmental and planar reflections. Finally, section 6 presents conclusions and future work.

2. Requirements for AR Kiosk Exhibitions

Museums and other heritage exhibitions need to exhibit their collections with the aim of educating and entertaining their visitors [MK*97]. However, they usually contain large collections of artefacts and information presented in different locations [BB*01]. One of the most challenging objectives is the preservation of our history and culture, with respect to time and place. To achieve this, two conditions must hold: the 3D representation must be accurate and the presentation realistic. Moreover, the input of archaeologists and museum curators is necessary to define the AR presentation style in terms of the user as well as the museum requirements. In particular, they should know the attractiveness of each artefact and the usefulness of the labels [BS95]. On the other hand, visitors require immediate, realistic and easy access to the AR information in real-time performance. It is important for them to relate to the augmented information as if it is part of the real environment. Technically speaking, the most important requirements concerning the effectiveness of an AR exhibition relate to the quality of the visualisation and interaction techniques. Effective interaction techniques for indoor AR environments have been previously presented [Lia07]. Apart from them, the success of a kiosk exhibition is highly related to the level of realism achieved. Properties of the real world such as lighting, shading, shadows and reflections must therefore be reflected in the AR kiosk exhibition.

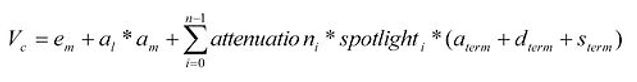

3. Realistic Augmentation

Realistic augmentation is an issue of high importance for the AR interface system. The major focus was to realistically augment 3D heritage scenes. To increase the realism of the AR scene, artificial lighting was carefully integrated. The precise manipulation of the light sources allowed for the generation of real-time shadows and reflections. Other effects, such as atmospheric effects and transparency, were also implemented to enhance realism.

3.1. Interactive Lighting and Shading

3D modelling techniques have advanced to such an extent that the common illumination model can be predetermined and saved in a specific computer graphics file format (e.g. 3ds, VRML). Thus, realism is an issue that is highly dependent on modelling capabilities. In cases where the illumination model is not pre-computed, an approximation model can be used. The real world is comprised of objects that have different characteristics. For example, some objects are extremely shiny whereas others are not. To succeed in realistic AR rendering, the common illumination model has to be controlled interactively. In recent years much research work has been performed in this field [LDR00][DRB97]. The biggest problem is to automatically match the virtual lighting information with the real world. In this work, this is done based on user input by controlling the position and characteristics of the light(s) in the AR environment. To estimate the lighting, OpenGL’s lighting model is adopted because it is one of the industry’s standards and can easily produce quite impressive results. This model divides lighting into four independent components: ambient, diffuse, specular and emissive. Light sources in OpenGL have several properties including colour, position and direction. To light the virtual scene further, multiple light sources can be added to the environment. The equation used for the entire calculation in RGBA mode [SW*99] is presented below:

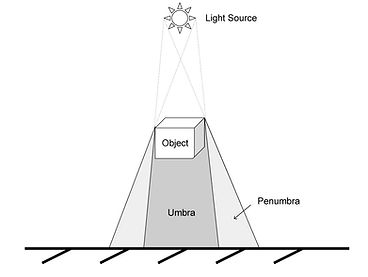

Figure 3: Shadow generation

A way to simulate soft shadows is to use the shadow map algorithm. In general, soft shadows are preferred because the soft edges make the user aware that the shadow is indeed a shadow. On the other hand, hard-edged shadows can be confusing and give the illusion of actual geometric features. Shadows projected on curved surfaces are considered to be an extension of planar shadows. Finally, another popular solution is to use a generated shadow image as a projective texture [Mol99].

4.2. Fake Shadows

Fake shadows are very easy to generate and, even if they are blurred or inaccurate, they can give an estimation of the depth [Mol99]. The easiest way to implement fake shadows is to project a 3D shape, which is similar to the rendered object, on the ground and then paint it black. In most applications, a simple geometrical shape is usually enough. Although the most popular shape is the sphere, other shapes such as the torus can produce quite effective results, as illustrated in Figure 4.

where S is the location of a point on the plane. Next, the straight line that passes from the plane point in the direction of the light source is defined by the equation of a straight line:

LI = LR + LS + LA + LT

where LI is the light incident at a surface, LR is the reflected light, LS is the scattered light, LA is the absorbed light and LT is the transmitted light. Reflection is a rendering technique that generates images that look similar to ray-traced images without having to trace the reflected rays. This implies that an instance of the real world is painted onto a surface as it is rendered [Ang03]. In this work, two different types of reflections have been implemented: environmental reflections and planar reflections.

5.1. Environmental and Spherical Mapping

Environmental mapping, also known as reflection mapping, is a simple and effective method of generating approximations of reflections on curved surfaces [Mol99]. It is usually classified as a texturing technique and can be considered as a simplification of ray tracing [Wat99]. The difference from ray tracing is that this mapping makes use of the direction of the reflection vector as an index pointing to an image that contains the environment [Mol99]. How environmental mapping works is illustrated in Figure 9.

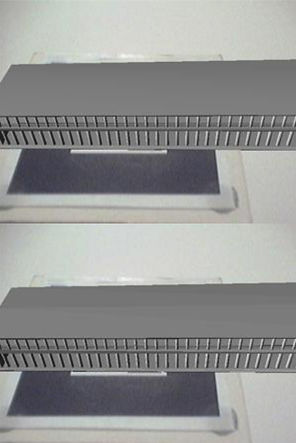

where Vc is the colour of each vertex, em is the emission of the material, αl is the ambient light model, αm is the ambient material, αterm is the ambient term, dterm is the diffuse term and sterm is the specular term.

Shading is used for determining the pixels’ colour based on lighting computations [Mol99]. There are three basic types of shading: flat, smooth and Phong shading [SW*99]. In flat shading the colour of one particular vertex is duplicated across all the vertices of the primitive. This method is very simple and works very fast but it does not give a smooth appearance to curved surfaces because all pixels in the polygon are shaded the same. On the other hand, smooth shading, also known as Gouraud shading, is one of the most popular shading algorithms. It interpolates light intensities across the face of a polygon using values taken from its vertices [Mol99]. Gouraud shading is slower than flat shading but it produces a smoother appearance across polygons, as presented in Figure 1.

Figure 1: Shading modes in AR (a) flat (top view) (b) smooth (bottom view)

Phong shading interpolates the normals across each polygon instead of interpolating the vertex intensities as performed in smooth shading. Although Phong shading produces smoother results, it has the drawback of a greater computational cost and is usually performed off-line [Ang03]. It is worth mentioning that shading is more appropriate when the virtual artefacts do not include any texturing information.
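The four-component lighting sum described in this section can be sketched in a few lines. The sketch below assumes the usual form of the OpenGL fixed-function model: Vc = em + αl·αm + Σ attenuation·(αterm + dterm + sterm), with positional lights, no spotlight factor and a local viewer. The function and dictionary key names are hypothetical and are not taken from the AR interface itself.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(*vectors):
    return tuple(sum(parts) for parts in zip(*vectors))


def mul(a, b):
    return tuple(x * y for x, y in zip(a, b))


def scale(v, s):
    return tuple(x * s for x in v)


def normalize(v):
    length = math.sqrt(dot(v, v))
    return v if length == 0.0 else scale(v, 1.0 / length)


def vertex_colour(position, normal, eye, material, lights, ambient_model):
    """Emission + global ambient + per-light (ambient + diffuse + specular)."""
    n = normalize(normal)
    view = normalize(sub(eye, position))
    # em + (ambient light model * ambient material)
    colour = add(material["emission"], mul(ambient_model, material["ambient"]))
    for light in lights:
        to_light = sub(light["position"], position)
        distance = math.sqrt(dot(to_light, to_light))
        l = normalize(to_light)
        kc, kl, kq = light["attenuation"]
        attenuation = 1.0 / (kc + kl * distance + kq * distance * distance)
        a_term = mul(light["ambient"], material["ambient"])
        n_dot_l = max(dot(n, l), 0.0)
        d_term = scale(mul(light["diffuse"], material["diffuse"]), n_dot_l)
        s_term = (0.0, 0.0, 0.0)
        if n_dot_l > 0.0:
            # Specular highlight from the half-vector between light and viewer.
            half = normalize(add(l, view))
            highlight = max(dot(n, half), 0.0) ** material["shininess"]
            s_term = scale(mul(light["specular"], material["specular"]), highlight)
        colour = add(colour, scale(add(a_term, d_term, s_term), attenuation))
    # Clamp each channel to the displayable range.
    return tuple(min(c, 1.0) for c in colour)
```

Evaluating this function once per vertex and interpolating the resulting colours across the polygon corresponds to smooth (Gouraud) shading, while copying a single vertex colour to the whole primitive corresponds to flat shading.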

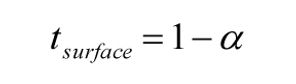

3.2. Transparency

The real world is composed of three types of objects: transparent, translucent and opaque objects. The most popular method for adding transparency information for translucent objects is alpha blending. Alpha blending is generated by rendering polygons through a predefined mask whose density is proportional to the transparency of the object. When the transparency effect is applied successfully, the resultant colour of a pixel is a combination of the foreground and background colour. The general equation for alpha, which has a normalized value of 0 to 1 for each colour pixel, is illustrated below:

where tsurface is the transparency of the surface of the object. In AR environments, transparency is an effect that can be applied very successfully in some types of applications or scenarios [BNB05], [Lia07]. The 3D models used must be created in such a way that they contain transparent surfaces. In the rendering part, the transparency of these objects is then achieved through the alpha blending mechanism.

where α is the opacity of the surface, pAx,y is the colour of pixel A and pBx,y is the colour of pixel B. Opacity can be used as an alternative to ray-tracing rendering [Ang03]. This method is based on the control of the alpha channel (α) of the colour mode (RGBA). More specifically, when blending is performed, the value of the alpha channel determines the contribution of each of the red, green and blue (RGB) components. Opacity is a measure of how much light penetrates through a surface. When the opacity of a surface is zero, the surface is fully transparent. The transparency of a surface can be calculated by the following formula:

Figure 2: Transparency levels in AR (a) less transparent (80% alpha blending) (b) more transparent (20% alpha blending)

Figure 2 shows the same textured object with different levels of transparency. Figure 2 (a) shows a 3D artefact blended with an alpha value close to unity (80% or 0.8), while Figure 2 (b) presents the same 3D artefact with an alpha value close to zero (20% or 0.2). This effect is very useful when occluding virtual artefacts are overlaid in a kiosk environment. As in commercial video games, transparency makes it possible to render the virtual artefact that occludes the others semi-transparently so that all artefacts remain visible. In addition, transparency can be very successfully applied for achieving other visualisation effects such as reflections (see section 5).
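The blending described in this section can be illustrated with a short sketch. It assumes the standard alpha blending rule, in which the resulting pixel is α times the foreground colour plus (1 - α) times the background colour, and that transparency is the complement of opacity (tsurface = 1 - α). The function names `blend` and `transparency` are hypothetical.

```python
def blend(foreground, background, alpha):
    """Combine a foreground and a background RGB colour using opacity alpha."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return tuple(alpha * f + (1.0 - alpha) * b
                 for f, b in zip(foreground, background))


def transparency(alpha):
    """Transparency of a surface, assumed to be the complement of its opacity."""
    return 1.0 - alpha


# The two settings of Figure 2: a red artefact over a blue background.
less_transparent = blend((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.8)
more_transparent = blend((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.2)
```

With α = 0.8 the artefact colour dominates the result, while with α = 0.2 most of the background shows through.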

3.3. Atmospheric Effects

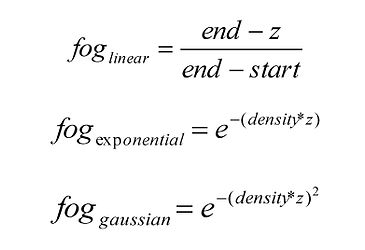

Special effects are an area of continuing research and development. The entertainment industry (mainly films and games) has made many advances in recent years. Fog is an existing 3D computer graphics effect that can be used to create the illusion of partially transparent space between the camera and the 3D object. This can be easily achieved by blending into the environment a distance-dependent colour that is controlled by the fog factor f [Ang03]. Using an atmospheric intensity attenuation function, the rendered information can appear as if seen through a hazy or smoky atmosphere [Bea04]. The three most popular types of fog densities are linear, exponential and Gaussian. The equations used for the different variations of the fog effect are illustrated below [SW*99]:

where z is the eye-coordinate distance between the viewpoint and the fragment centre, and start and end are the near and far boundary limits of the linear fog, respectively. Fog can be generated by blending an RGB fog colour with the fragment colour using one of the above blending factors.
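The three fog variations can be sketched as follows. The formulas are the ones commonly documented for OpenGL fog (linear, exponential and squared exponential, the latter playing the role of the Gaussian variant); the `density` parameter and the function names are assumptions introduced for illustration.

```python
import math


def fog_linear(z, start, end):
    """Linear fog factor, clamped to [0, 1]."""
    f = (end - z) / (end - start)
    return max(0.0, min(1.0, f))


def fog_exp(z, density):
    """Exponential fog factor."""
    return math.exp(-density * z)


def fog_exp2(z, density):
    """Squared exponential (Gaussian-like) fog factor."""
    return math.exp(-(density * z) ** 2)


def apply_fog(fragment_rgb, fog_rgb, f):
    """Blend the fragment colour with the fog colour; f = 1 means no fog."""
    return tuple(f * c + (1.0 - f) * g for c, g in zip(fragment_rgb, fog_rgb))
```

A fragment halfway between start and end receives a factor of 0.5 in the linear mode, so it is drawn as an equal mix of its own colour and the fog colour.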

4. Shadow Generation

Shadows play a very important role in the generation of a realistic AR kiosk environment. In reality, all artefacts cast shadows, so virtual artefacts should cast them as well. The most important shadow algorithms [Mol99] can be divided into two types: planar and curved surface shadows.

4.1. Planar and Curved Surfaces Shadows

Planar shadows are the simplest case of shadowing onto planar surfaces. Hard shadows may be generated using only point light sources, but area light sources have to be used to create soft shadows. In the real world, shadows consist of a fully shaded region, called the umbra, and a partially shadowed area, called the penumbra [Vin95], as illustrated in Figure 3.

Figure 4: Fake shadow generation using (a) Torus (left view) (b) Sphere (right view)

In both images of Figure 4, a virtual torus is superimposed on a marker card but different fake shadows are projected onto the ground. The fake shadow presented in Figure 4 (a) is represented using a torus, while in Figure 4 (b) the shadow is represented using a sphere. The difference between these two types lies in the appearance of the virtual shadow. The sphere produces much smoother results but this is not always the case. Other geometrical shapes that have been experimentally tested include the square, the cube, the cone, the pyramid and the teapot. Although this type of shadow is sufficient for some applications, it cannot be used in cases where realism is an important factor. To realise a more realistic augmentation, more advanced shadowing techniques are required, such as planar shadows.
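The fake shadow idea can be sketched as flattening the vertices of a simple proxy shape onto the ground and drawing the result in black. The sketch assumes a horizontal ground plane at a constant height and uses hypothetical names (`flatten_to_ground`, `SHADOW_COLOUR`); it is not the implementation used for Figure 4.

```python
SHADOW_COLOUR = (0.0, 0.0, 0.0)  # fake shadows are simply painted black


def flatten_to_ground(vertices, ground_y=0.0):
    """Drop every vertex of a proxy shape straight down onto the plane y = ground_y."""
    return [(x, ground_y, z) for x, _, z in vertices]


# Two vertices of a proxy shape hovering above the marker card.
proxy = [(1.0, 2.0, 3.0), (4.0, 5.0, 6.0)]
shadow = flatten_to_ground(proxy)
```

In practice the flattened shape is often drawn slightly above the ground plane so that it does not flicker against it.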

4.3. Planar Shadows

Realistic shadows are quite difficult to implement in real-time [Vin95] but planar projection shadows can produce very nice effects without having to account for the generation of soft shadows. Although there are many algorithms for generating shadows, such as ray tracing, projection, shadow volumes and shadow maps [AT*01], this work concentrates on a general solution for shadows projected onto a kiosk presentation environment.

Figure 5: Planar shadow projection

As illustrated in Figure 5, the location of the shadow can be calculated by projecting all the vertices of the AR object along the direction of the light rays onto the plane. To generate augmented shadows, a 4x4 projection matrix, Ps, in homogeneous coordinates must be calculated based only on the plane equation coefficients and the position of the light [Mol99]. Let L be the position of the point light source, P the position of a vertex of the AR artefact that casts the shadow, and n the normal vector of the plane (Figure 6).

Figure 6: Calculation of the shadow projection

As a first step, a plane needs to be expressed as:

Figure 7: Hard shadows (a) Canonical shape (cube, left) (b) Non-canonical shape (tree, right)

The main disadvantage of this algorithm is that it renders the virtual information twice for each frame: once for the virtual object and once for its shadow. Another obvious flaw is that it can cast shadows only onto planar surfaces, but with some modifications it can be extended to specific cases such as curved surfaces.

4.4. Soft Shadows

Although hard shadows increase the level of realism of the AR scene, there are still a lot of issues to address in achieving realistic rendering. In theory, any projection matrix which generates hard shadows can be used for the generation of soft shadows [Mol99]. To generate the effect of soft shadows the same principle may be applied with some modifications. The shadow matrix needs to be calculated only once, but the augmentation of the matrix must be performed for a number of 3D points that must have a small displacement from the origin and can cover the area near the hard shadow, as illustrated in Figure 8.
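A rough sketch of this approximation is given below. It assumes one common variant of the idea, in which the hard shadow is repeated for a small grid of displaced light positions and each pass is drawn with a fraction of the full shadow weight, so that the overlapping region forms the umbra and the fringe forms the penumbra. The ground plane is assumed to be y = 0 and all names are hypothetical.

```python
def project_onto_ground(vertex, light):
    """Project a vertex onto the plane y = 0 along the ray leaving the light."""
    lx, ly, lz = light
    vx, vy, vz = vertex
    t = ly / (ly - vy)  # ray parameter at which the ray reaches y = 0
    return (lx + t * (vx - lx), 0.0, lz + t * (vz - lz))


def displaced_lights(light, radius, n=3):
    """Return an n x n grid of light positions displaced in x and z."""
    lx, ly, lz = light
    steps = [radius * (2.0 * i / (n - 1) - 1.0) for i in range(n)]
    return [(lx + dx, ly, lz + dz) for dx in steps for dz in steps]


def soft_shadow_samples(vertex, light, radius, n=3):
    """Return (shadow point, weight) pairs, one per displaced light position."""
    lights = displaced_lights(light, radius, n)
    weight = 1.0 / len(lights)
    return [(project_onto_ground(vertex, l), weight) for l in lights]


samples = soft_shadow_samples((1.0, 5.0, 2.0), (0.0, 10.0, 0.0), radius=1.0)
```

Increasing `n` gives more passes and a smoother penumbra at the cost of rendering the shadow geometry more often.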

Solving for γ and substituting into the above equations, the shadow projected point, P’, contains the correct projection as shown below:

In order to turn this into a matrix, it is necessary to follow the projection matrix convention, as shown below:

Based on the above equation, the transpose of the projection matrix that calculates the shadow [MB99] is illustrated below:

where Lp•Pc is the dot product of the plane coefficients and the light position. The projection matrix has a number of advantages compared with other methods such as fake shadows. The most important is that it works fast and it is generic, so that it can generate hard shadows in real-time for any type of object independently of its complexity. Two example screenshots, illustrating the shadow of a simple cube (Figure 7, a) and of a tree (Figure 7, b), are shown in Figure 7.
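The planar projection can be sketched with the widely used shadow matrix M = (plane • light) I - light ⊗ plane, which is the form given in many OpenGL tutorials. Whether this matches the exact layout of the matrix in the original figure is an assumption, and the function names are hypothetical. The plane is given by its coefficients (a, b, c, d) and the light by homogeneous coordinates (x, y, z, w).

```python
def shadow_matrix(plane, light):
    """Build the 4x4 matrix that projects points onto `plane` away from `light`."""
    d = sum(p * l for p, l in zip(plane, light))  # dot product of plane and light
    return [[(d if i == j else 0.0) - light[i] * plane[j] for j in range(4)]
            for i in range(4)]


def transform(matrix, vector):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(matrix[i][j] * vector[j] for j in range(4)) for i in range(4)]


def project(vertex, plane, light):
    """Return the shadow point of a 3D vertex after the homogeneous divide."""
    x, y, z, w = transform(shadow_matrix(plane, light), list(vertex) + [1.0])
    return (x / w, y / w, z / w)


# Light 10 units above the ground plane y = 0, vertex halfway up.
print(project((1.0, 5.0, 2.0), (0.0, 1.0, 0.0, 0.0), (0.0, 10.0, 0.0, 1.0)))
```

The matrix here is written for column vectors. Because OpenGL stores matrices in column-major order, the flat array passed to a call such as `glMultMatrixf` is the transpose of this row-by-row layout.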

Figure 8: Approximation of soft shadows

Although this technique is not very computationally expensive, it does not provide very realistic results. However, if a large number of displacements are used, then it is possible to improve the visualisation results. To achieve more realism, other techniques, such as a frustum-based method, need to be employed [Mol99].

5. Reflection Generation

Reflections are one of the most important ingredients for realistic computer graphics visualisation [Mol99]. The general lighting equation, also called the rendering equation, accounts for reflections and shadows [Kaj86]. The light reflection depends on four different factors, as shown by the equation [Wat99] below:

Figure 9: Environmental mapping

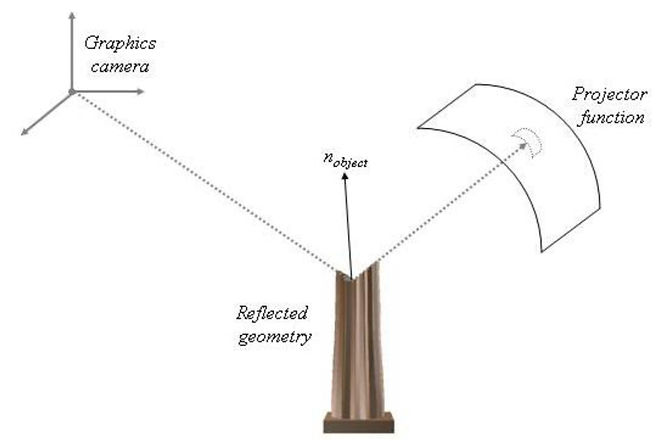

In Figure 9, the graphics camera represents the user and is defined as a vector c, the normal of the object is defined as N and the reflection vector as r. Using these attributes, the reflection vector of the viewer can be computed using the following equation [Ang03], [Mol99]:

r = 2(N • c)N - c

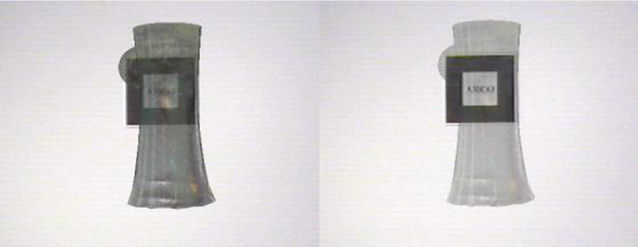

where c represents the normalised vector from the location on the surface towards the graphics camera, and N is the surface normal at that location. Although the main limitation of environmental mapping is that artefacts near the reflector cannot be reflected accurately, it can also be used to give limited recursive reflections [MB99]. Additionally, it is possible to achieve a variation of the above method, called spherical mapping [Ang03]. The idea is to map the environment onto a sphere using orthographic projection and then generate a texture. In this work, the image is stored in image memory and the texture coordinates are generated automatically. The main advantage of this technique is that it is simple to implement and provides a rough estimation of reality. Two example screenshots illustrate how spherical mapping can be applied to a textured 3D virtual artefact. Figure 10 (a) shows a 3D artefact with simple texturing applied, while Figure 10 (b) illustrates the same 3D artefact with sphere mapping.
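The reflection vector and the automatically generated sphere map coordinates can be sketched as follows. The sketch assumes that N and c are unit vectors with c pointing away from the surface, and it uses the texture coordinate formula commonly documented for OpenGL sphere mapping; the function names are hypothetical.

```python
import math


def reflect(normal, c):
    """Reflection vector r = 2(N . c)N - c for unit vectors N and c."""
    k = 2.0 * sum(n * v for n, v in zip(normal, c))
    return tuple(k * n - v for n, v in zip(normal, c))


def sphere_map_coords(r):
    """Map a reflection vector to (s, t) sphere map texture coordinates."""
    rx, ry, rz = r
    m = 2.0 * math.sqrt(rx * rx + ry * ry + (rz + 1.0) ** 2)
    if m == 0.0:
        raise ValueError("sphere mapping is undefined for r = (0, 0, -1)")
    return (rx / m + 0.5, ry / m + 0.5)


# A viewer looking straight at a surface sees the centre of the sphere map.
r = reflect((0.0, 0.0, 1.0), (0.0, 0.0, 1.0))
s, t = sphere_map_coords(r)
```

The single undefined direction, directly behind the reflector, is the well-known singularity of sphere mapping and is one reason the technique gives only a rough estimation of reality.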

Figure 11: Reflection in a plane

In this work, the effect of mirror reflections has been implemented. Based on the capabilities of the stencil buffer, a reflection of the object is rendered onto the virtual ground, which is user-defined. The stencil buffer is initially set to sixteen bits in the pixel format function. Then, the buffer is cleared and finally the stencil test is enabled.

Figure 12: Transparency in a user-defined plane

In Figure 12, a 3D object is overlaid on a marker card and its planar reflection is projected onto a user-defined plane. Transparency is applied to the virtual plane in order to emphasise the appearance of the virtual artefact. To enhance the realism of the AR reflections, hard shadows can be projected onto the same plane.
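The geometric part of a planar reflection can be sketched as mirroring each vertex of the artefact across the reflecting plane. The sketch assumes a plane written as n • x + d = 0 with a unit normal n, and the function name `reflect_point` is hypothetical. The stencil test described above is what restricts the mirrored copy to the pixels covered by the plane; that masking step is not shown here.

```python
def reflect_point(point, normal, d):
    """Mirror a 3D point across the plane normal . x + d = 0 (unit normal)."""
    distance = sum(n * p for n, p in zip(normal, point)) + d  # signed distance
    return tuple(p - 2.0 * distance * n for p, n in zip(point, normal))


# A vertex two units above the ground plane y = 0 appears two units below it.
mirrored = reflect_point((1.0, 2.0, 3.0), (0.0, 1.0, 0.0), 0.0)
```

Drawing the mirrored copy first and then blending the semi-transparent plane over it gives the effect shown in Figure 12.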

6. Conclusions and Future Work

Realism in AR exhibitions is one of the means that museums can use to attract the visitor’s attention. This paper has illustrated how realistic AR kiosk exhibitions of museum collections, including galleries and artefacts, can be developed in real-time performance. Advanced computer graphics rendering algorithms such as interactive lighting and shading, fake, soft and hard shadows and reflections are standard features that must be included in any augmented exhibition. In the future, shadows and reflections will be extended to operate on curved surfaces. In addition, more advanced rendering techniques will be implemented including level-of-detail, collision detection and ray tracing. Finally, a lite version of the AR interface will be ported to handheld devices such as smartphones and personal digital assistants (PDAs), allowing for mobile AR realistic rendering inside museums.

Acknowledgments

The author would like to thank the Department of Creative Computing at Coventry University as well as the Centre for VLSI and Computer Graphics at the University of Sussex for their support and inspiration.

References

[Ang03] Angel, E., Interactive Computer Graphics: A Top-Down Approach Using OpenGL, Addison-Wesley, Third Edition, 17-18, 69, 107, 322-349, 472, (2003).

[Arn00] Arnold, D.B., Computer Graphics and Archaeology: Realism and Symbiosis, In Joint ACM SIGGRAPH and EUROGRAPHICS Campfire on Interpreting the Past in Preconference Proceedings, 10-21, (2000).

[AT*01] Andersen, D., Thomsen, C., et al., ARS-Generator - An augmented reality shadow generator, Report, Aalborg University, Department of Computer Science, 4th Sep-19th Dec, (2001).

[BA*99] Brogni A., Avizzano C.A., et al., Technological Approach for Cultural Heritage: Augmented Reality, Proc. of the 8th Int’l Workshop on Robot and Human Interaction, Pisa, Italy, (1999).

[BB*01] Baber, C., Bristow, H., et al., Augmenting Museums and Art Galleries, Human-Computer Interaction INTERACT '01, 439-447, (2001).

[Bea04] Beaker, H., Computer Graphics with OpenGL, Pearson Prentice Hall, Third Edition, 644, (2004).

[BF*01] Bimber, O., Frohlich, B., Schmalstieg, D., and Encarnacao, L.M., The Virtual Showcase, Computer Graphics and Applications, IEEE Computer Society, 21(6): 48-55, Nov/Dec, (2001).

[BNB05] Buchmann, V., Nilsen, T., and Billinghurst, M., Interaction With Partially Transparent Hands And Objects, Proc. of the Sixth Australasian conference on User interface, ACM Press, Volume 40, Newcastle, Australia, 17-20, (2005).

[BS95] Boisvert, D.L., and Slez, B.J., The relationship between exhibit characteristics and learning-associated behaviours in a science museum discovery space, Science Education, 79(5), 503-518, (1995).

[DRB97] Drettakis, G., Robert, L and Bougnoux, S., Interactive Common Illumination for Computer Augmented Reality, Proceedings of Rendering Techniques '98 (Eighth EG Workshop Rendering), J. Dorsey and P. Slusallek, eds., 45-56, June, (1997).

[Gat00] Gatermann, H., From VRML to Augmented Reality Via Panorama-Integration and EAI-Java, in Constructing the Digital Space, Proceedings of the SiGraDi, 254-256, September, (2000).

[HC*01] Hall, T., Ciolfi, L., et al., The Visitor as Virtual Archaeologist: Using Mixed Reality Technology to Enhance Education and Social Interaction in the Museum, Proc. of Virtual Reality, Archaeology, and Cultural Heritage, ACM Press, Glyfada, Nr Athens, November, 91-96, (2001).

[HCC05] Huang, C.R, Chen, C.S, Chung, P.C., Tangible Photorealistic Virtual Museum, Computer Graphics and Applications, IEEE Computer Society, January/February, 25(1): 15-17, (2005).

[Kaj86] Kajiya, J.T., The rendering equation, Computer Graphics (SIGGRAPH), 20(4): 143-150, August, (1986).

[LDR00] Loscos, C., Drettakis, G., and Robert, L., Interactive Virtual Relighting of Real Scenes, Transactions on Visualisation and Computer Graphics, IEEE Computer Society, 6(4): 289-305, (2000).

[Lia07] Liarokapis, F., An Augmented Reality Interface for Visualising and Interacting with Virtual Content, Virtual Reality, Springer, 11(1): 23-43, (2007).

[LS*04] Liarokapis, F., Sylaiou, S., et al., An Interactive Visualisation Interface for Virtual Museums, 5th International Symposium on Virtual Reality, Archaeology and Cultural Heritage, Eurographics Association, Brussels, Belgium, 6-10 Dec, 47-56, (2004).

[MB99] McReynolds, T., and Blythe, D., Advanced Graphics Programming Techniques Using OpenGL, SIGGRAPH `99 Course, (1999).

[MK*97] Mase, K., Kadobayashi, R., et al., Meta-Museum: A Supportive Augmented-Reality Environment for Knowledge Sharing, ATR Workshop on Social Agents: Humans and Machines, Kyoto, Japan, April 21-22, (1997).

[Mol99] Moller, T., Real-Time Rendering, A K Peters Ltd, 23-38, 171, (1999).

[SL*05] Sylaiou, S., Liarokapis, F., et al., Virtual Museums: First Results of a Survey on Methods and Tools, CIPA XX Symposium, Torino, Italy, Sept 26-1 Oct, 1138-1143, (2005).

[SW*99] Shreiner, D., Woo, M., et al., OpenGL Programming Guide: The Official Guide to Learning OpenGL, Version 2 (5th Edition), Addison Wesley, August, (2005).

[Vin95] Vince, J., Virtual Reality Systems, Addison-Wesley Publishing Company, 180-182, 288-292, (1995).

[Wat99] Watt, A., 3D Computer Graphics, Addison-Wesley, Third Edition, December 6, (1999).

[WM*04] White, M., Mourkoussis, N., et al., ARCO-An Architecture for Digitization, Management and Presentation of Virtual Exhibitions, Proc. of the 22nd Int’l Conference on Computer Graphics (CGI'2004), IEEE Computer Society, Hersonissos, Crete, June 16-19, 622-625, (2004).

Fotis is the director of the Interactive Worlds Applied Research Group (iWARG), Faculty of Engineering and Computing at Coventry University, and a research fellow at the Serious Games Institute. He has contributed to more than 75 refereed publications with more than 600 citations, has been invited 60 times to become a member of international conference committees and has chaired 13 sessions in 8 international conferences. Fotis is the editor in chief of the International Journal of Interactive Worlds (IJIW) and he is on the editorial advisory board of The Open Virtual Reality Journal. He has organised VS-Games 2009 (publications chair), the STARS session of VAST 2009 (chair of state-of-the-art reports), VS-Games 2010 (steering committee) and VS-Games 2011 (general chair). Finally, he is a member of IEEE, IET, ACM, BCS and Eurographics.
